Are You Making These 7 Common AI Coaching Mistakes That Kill Authentic Connection in 2026?

- Wix Partner Support

- Jan 11

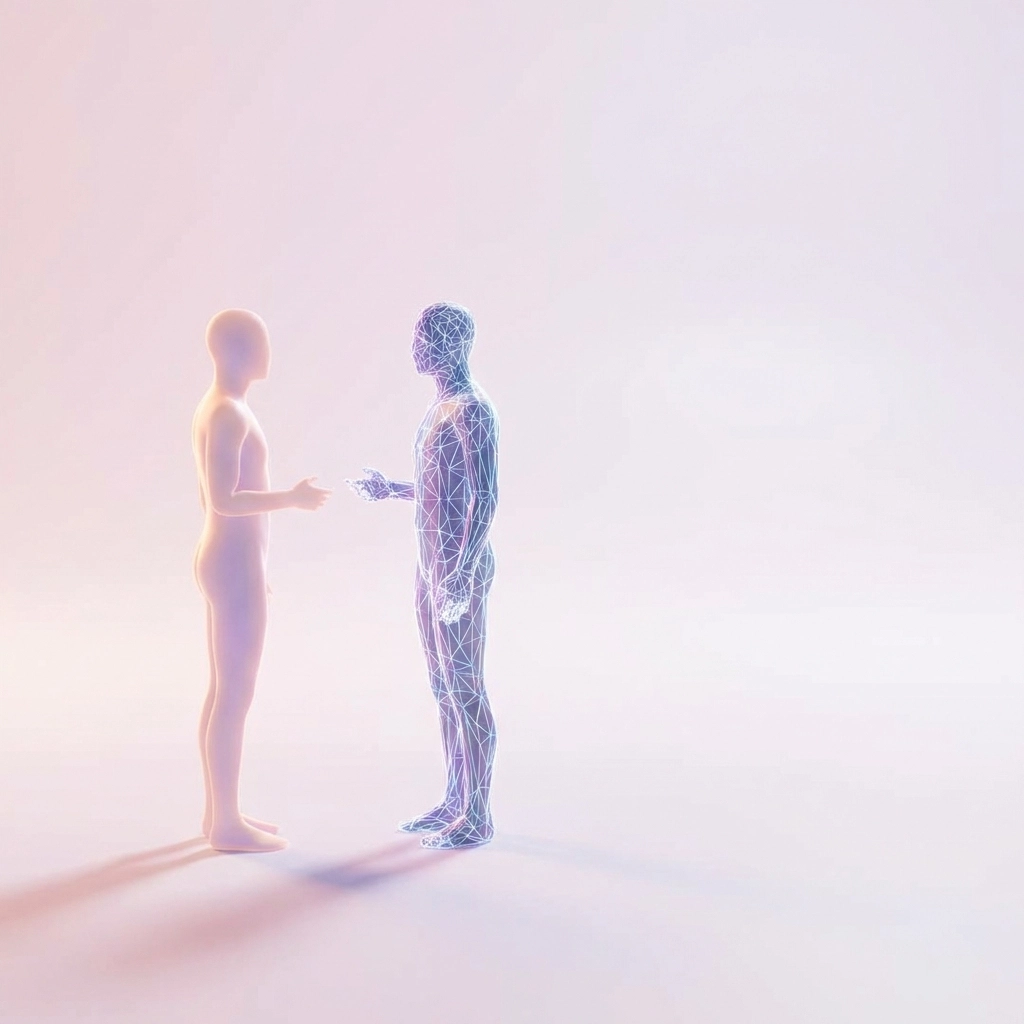

The coaching world is buzzing with AI tools. Everywhere you turn, there's a new platform promising to revolutionize how we connect with clients, streamline our processes, and scale our impact. But here's what nobody's talking about: most of us are using AI in ways that damage the very thing that makes coaching powerful, which is authentic human connection.

As someone who works with LGBTQ+ leaders, therapists, and executives, I've watched brilliant professionals get seduced by shiny AI tools, only to realize they've traded depth for convenience. The cost? Trust, vulnerability, and the kind of transformational breakthroughs that happen when two humans truly see each other.

Let's explore the seven mistakes that are quietly eroding authentic connection in coaching, and what you can do instead.

Mistake #1: Letting AI Replace Deep Listening

You know that moment in a session when your client pauses mid-sentence, their voice catches, and suddenly the real conversation begins? AI misses those moments entirely.

Here's the thing: AI is terrible at listening. It processes words, not the silence between them. It can't hear the tremor in someone's voice when they mention their pronouns for the first time at work. It doesn't catch the way someone's energy shifts when discussing family rejection.

What to do instead: Use AI for prep work: researching industry trends, organizing session notes, or brainstorming questions. But when you're in session? Put the tech aside and listen with your whole being.

Mistake #2: Using AI for Identity-Specific Guidance Without Human Context

I've seen coaches use AI to generate advice for LGBTQ+ clients around coming out at work or navigating microaggressions. The results? Generic responses that miss the nuanced reality of intersectional identities.

AI doesn't understand what it means to be a trans executive in a traditional corporation, or a queer therapist of color trying to build a practice. It can't factor in the complex dance of visibility, safety, and authenticity that marginalized professionals navigate daily.

The reality check: Your lived experience, cultural competency, and ability to hold space for intersectional challenges are irreplaceable. AI can help you research best practices, but it can't replace identity-affirming coaching.

Mistake #3: Prioritizing Efficiency Over Emotional Safety

It's tempting to use AI to speed up sessions: auto-generating action plans, suggesting interventions, or even drafting follow-up emails. But here's what we lose: the organic pace of healing.

Trauma-informed coaching requires slowing down. Creating space. Letting clients find their words without rushing toward solutions. When we let AI drive the agenda, we risk retraumatizing clients who've spent their lives having their experiences rushed, dismissed, or pathologized.

Remember: Efficiency isn't always effective. Sometimes the most powerful thing you can do is sit in silence while someone gathers courage to speak their truth.

Mistake #4: Missing Cultural Context and Microaggressions

AI tools trained on mainstream datasets often perpetuate bias. They might suggest communication strategies that work for cisgender, straight, white professionals but fall flat, or even cause harm, for everyone else.

I've seen AI recommend "assertiveness training" for a Latina executive experiencing workplace discrimination, completely missing the double-bind of being perceived as "aggressive" when advocating for herself. The algorithm doesn't understand cultural taxation, stereotype threat, or the exhaustion of code-switching.

The solution: Trust your cultural competency. Use AI as a starting point for research, but always filter recommendations through your understanding of systemic oppression and cultural dynamics.

Mistake #5: Using AI as a Quick Fix for Complex Trauma

Coaching isn't therapy, but many of our clients carry trauma from workplace harassment, family rejection, or systemic discrimination. When coaches rely on AI for guidance around these sensitive areas, things can go sideways fast.

AI might suggest exposure-based interventions for someone with PTSD, or recommend "positive thinking" approaches for someone processing grief. It operates on rules and algorithms, not trauma-informed principles.

The boundary: Know when to refer. Know when to slow down. And never let an algorithm guide you through someone's healing journey. Your training, intuition, and clinical judgment are what keep clients safe.

Mistake #6: Failing to Explain AI's Role to Clients

Transparency builds trust. Secrecy erodes it. Yet many coaches are quietly incorporating AI tools without discussing them with clients. This creates an ethical gray area that can damage the coaching relationship.

Your clients deserve to know if AI is analyzing their session transcripts, generating their action plans, or influencing your coaching approach. They especially deserve a choice about whether they're comfortable with AI being part of their journey.

The practice: Be upfront about your AI use. Explain how it enhances (not replaces) your human judgment. Get explicit consent. And always give clients the option to work without AI tools.

Mistake #7: Letting AI Override Your Professional Judgment

Here's the biggest mistake of all: trusting an algorithm over your expertise. I've watched experienced coaches second-guess their instincts because an AI tool suggested a different approach.

AI doesn't have clinical training. It hasn't worked with hundreds of clients. It doesn't understand the subtle art of timing, pacing, and holding space. When coaches defer to AI recommendations that contradict their professional judgment, everyone loses.

The reminder: You are the expert. AI is a tool. Trust your training, your experience, and your intuition. If something feels off about an AI suggestion, it probably is.

Finding Balance: AI as Ally, Not Replacement

Don't misunderstand: I'm not anti-AI. Used thoughtfully, these tools can amplify our impact. They can help with research, organization, and administrative tasks. They can free up mental energy for the deep work that only humans can do.

The key is discernment. In 2026, the most valuable skill isn't knowing how to use AI; it's knowing when not to use it. It's maintaining the wisdom to distinguish between tasks that benefit from automation and moments that require human connection.

Your Next Step

Take inventory of your current AI use. Ask yourself:

- Where is AI enhancing my ability to connect?

- Where might it be creating distance?

- How can I be more transparent with clients about my tech use?

- What would happen if I trusted my human judgment more?

The future of coaching isn't about choosing between human connection and technological advancement. It's about using both wisely, ensuring that our tools serve our clients' deepest needs, not just our convenience.

Because at the end of the day, people don't transform through algorithms. They transform through being truly seen, heard, and understood by another human being. That's something no AI can replicate, and something our world desperately needs more of.

Your authentic presence is not just valuable in 2026. It's revolutionary.
